When I learned to program a computer, my university only owned ONE computer. It was an IBM 1620 digital computer. It had a memory bank made up of tiny magnets strung on wires that provided 20,000 storage positions. We talked to it via punched cards and a typewriter. It was wonderful.

Today I have a phone that has 128 billion bytes of memory and it can do things I never dreamed of when I was typing in machine language instructions to the 1620.

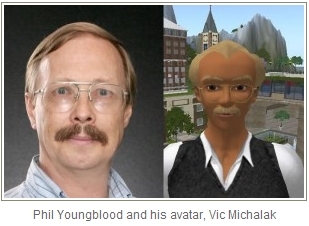

I wondered what the current trends and issues in computer science might be, so I reached out to Dr. Vic Michalak (SL avatar for Dr. Philip Youngblood) for his take on what’s hot in computer science today.

“Two things come to mind off the top of my head: robotics and quantum computing,” he said.

“Robotics is the use of computers to control moving things, rather than just staying in one place and ‘thinking.’ Like expert systems, robots have been around for a long time in our imaginations and for decades in primitive programmable systems. The first real use of robots that had a large-scale economic and social impact was in the car manufacturing industry starting in the 1960’s, really coming into the social consciousness with real social and economic impact in the late 1900’s. The next use with real social impact is that of autonomous vehicles in the 2000’s. Obviously this has yet to play out.

The reason why I chose them as so important and a ‘best example of research’ is the most recent and near future advances where AI and robotics come together. These are not just mechanical arms that spray paint on cars to free humans from having to do repetitive or hazardous activities, but human (or animal) surrogates that can negotiate unknown terrain, buildings, and objects to sense the environment in a collapsed building and find and rescue humans trapped there. It is still a decade or more away, but in the not-too-distant future we will have a variety of intelligent, autonomous vehicles and, not much later, robots as personal companions, servants, or intimate partners.

Describing quantum computers takes some background information first… All computers today basically work like a telegraph from the mid-1800’s, that is, electricity is turned on and off (creating two ‘states’ such as on=1 and off=0) to create patterns that represent more complex concepts such as letters or numbers (such as 10=A or 111=S). Later, changing properties of electromagnetic waves (early 1900’s technology) would be used to do the same thing. Still later, different patterns of on/off represented colors, sounds, and pictures. That is pretty much what lay people need to know about computers. Oh, maybe one other thing. Since the 1950’s, computer scientists and engineers have been able to make components smaller and smaller until key components like transistors are so small that their smallest parts are only a few atoms across. But transistors, which create the 1’s and 0’s we use today, still only control whether electricity is on or off.

So what can we do if we cannot make transistors any smaller? We use atoms themselves as transistors. Just like we control electricity (on or off) or the properties of electromagnetic waves, we are learning to change atomic properties quickly and in a coordinated fashion so that we can create patterns to represent the same things that traditional computers do. There is one major difference though. Traditional computers can do only one thing at a time, just like the human brain. Computers and brains can divide up some tasks, and they can create the illusion of doing more than one thing at a time by doing one tiny thing for one task, then quickly switching to do another tiny thing for another task, and switching back and forth quickly, but quantum computers can truly do a lot of things at the same time. That means that, as they become more capable, quantum computers will rather soon be able to exceed the capabilities of both traditional computers and thinking animals like humans.”
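The "illusion of doing more than one thing at a time" that Dr. Michalak describes can be sketched in a few lines of Python. This is only a toy illustration, not how any real operating system is written: the task names and the `round_robin` helper are made up for the example, and each task simply reports one tiny step per turn.

```python
from collections import deque


def task(name, steps):
    """A hypothetical task that does one tiny piece of work per turn."""
    for i in range(1, steps + 1):
        yield f"{name}: step {i}"


def round_robin(tasks):
    """Give each task one turn, then switch to the next, until all finish."""
    queue = deque(tasks)
    log = []
    while queue:
        current = queue.popleft()
        try:
            log.append(next(current))  # do one tiny thing for this task
            queue.append(current)      # not finished: back of the line
        except StopIteration:
            pass                       # finished: drop it from the queue
    return log


schedule = round_robin([task("A", 2), task("B", 3)])
for line in schedule:
    print(line)
```

Only one step ever runs at a time, yet the printed log interleaves tasks A and B, which is why the switching looks like simultaneous work from the outside.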

When I learned to program computers, my university did not have a computer science program. We were in the mathematics department and working at very applied levels. My area was statistical analysis. I have always wondered if programming was a trade or if programmers were integral to the science of computing. I asked Dr. Michalak his view on this.

“Computer programmers are not just technicians. An air conditioning unit repairman is a technician, even though a good one will know how the air conditioner works. A computer programmer is a god! No, seriously, a good programmer is part linguist (they have to know what language to use to ‘talk’ to the computer in the best way so it will do what they want), part problem solver (the computer is, after all, a machine that helps people solve problems), and part UI/UX (user interface/user experience) expert (requiring a good idea about how humans can best interact physically with machines, or with interfaces like keyboards, mice, and screens/monitors, plus how to make that experience easy and intuitive). A good programmer also likely knows about database design, color theory, graphic arts, and a lot of other fields, although people specialize in each of those areas.

How does it fit into science… Science is observation, hypothesis (it appears that this works this way), collecting and analyzing data to test if the hypothesis is correct, interpreting the results, reporting on what you found to others, and a consensual correcting of how we think things work; ‘rinse and repeat’. Programmers go through the same process by thinking of what they want the computer to do, writing code to make it happen, testing the code, interpreting if it works like it should, sharing the code with others, and other programmers making their programs better from another programmer’s work.”

A closing thought about some of the pioneers of computer science. During World War II, Bletchley Park was England’s cyber-warfare center devoted to decoding German encrypted messages. During this time mathematicians at Bletchley Park and at other locations in England and the US were working on designing computers. While no computer, in the modern sense, was developed at Bletchley Park, people who worked there went on after the war to create them.

Dr. Michalak explains, “Alan Turing, John von Neumann, and Claude Shannon were particularly instrumental in coming up with key ideas that led to the modern computer, particularly with how we are able to combine data (like numbers) and instructions (like move this number to there) in the same programming ‘sentence’ when the only thing the computer sees is 1’s and 0’s. For example, humans learn at an early age that 2+2= means that the number 2 (data) is added (an instruction) to another 2 (data). The computer just sees ‘+’ as the number 43 (0101011 in ASCII). When is it a 43 and when is it the ‘add’ instruction? In the right context!”
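Here is a small Python sketch of that idea of context. The ASCII values are standard, but the "toy machine" is purely an assumption for illustration: no real processor uses 43 as its add instruction, and the `OPCODES` table and helper names are invented for this example.

```python
PLUS = ord("+")             # the character code the computer stores for '+'
bits = format(PLUS, "07b")  # the same value written as a 7-bit pattern


def as_text(code):
    """Context 1: treat the number as data, a character to display."""
    return chr(code)


# Context 2: a hypothetical toy machine where code 43 means 'add'.
OPCODES = {43: lambda a, b: a + b}


def as_instruction(code, a, b):
    """Treat the same number as an instruction applied to two data values."""
    return OPCODES[code](a, b)


print(PLUS, bits)                  # 43 0101011
print(as_text(PLUS))               # +
print(as_instruction(PLUS, 2, 2))  # 4
```

The number never changes; only the routine that reads it decides whether it is a symbol to show or an operation to perform.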

From my perspective, I see the work done at Bletchley Park as the petri dish from which our modern machines emerged. Also, while it’s the guys who get most of the kudos, there were women who played important roles there as well.

References and Resources

- Punched card image from Pete Birkinshaw from Manchester, UK [CC BY 2.0 (https://creativecommons.org/licenses/by/2.0)]

- Basic Programming Concepts and the IBM 1620 Computer, D. N. Leeson & D. L. Dimitry, Holt, Rinehart and Winston, New York, 1962. The 1620 was made from 1959 until 1970.

- In real life, Michalak is Philip Youngblood, Ph.D., who recently retired from the University of the Incarnate Word in San Antonio, Texas where he was the founder and chairman of the Department of Computer Information Systems and Cyber Security Systems.

- Timeline of ‘robotics’ up until 2006. I got a Roomba iRobot carpet cleaner for my birthday. It is controlled from my phone and does an amazing job. My cats are fascinated with it.

- Boston Dynamics is a spin-off company from MIT. It has designed and markets a number of robots.

- What is Quantum Annealing?, D-Wave Systems, 2015. First of several linked videos discussing quantum computing methods.

- How Does a Quantum Computer Work?, Veritasium, 2013

- Information Theory: What relationship did Claude Shannon have with Alan Turing?, Jimmy Soni, Quora, 2018.

- The Ban and the Bit: Alan Turing, Claude Shannon, and the Entropy Measure, J.S. Adams, Girl Meets Whiskey.

- The extraordinary female codebreakers of Bletchley Park, Sarah Rainey, The Telegraph, 2015.

- Take an interactive tour of an AMD 64-bit processor at the AMD Developer Central Second Life Pavilion.
